When I heard that the Workflow founders had left Apple to start their own thing, I had the distinct feeling that future gifts were coming. Turns out I was right. They’ve started a new company, Software Applications Incorporated, where they are working on a Mac Automation tool that will use AI. This is precisely the kind of AI I’d like to use. I can’t wait to see what they produce.


Testing Lex, an AI-driven Word Processor

I’ve been hearing from a lot of folks about lex.page, a Google Docs-like word processor with AI tools baked in. While I’m not a fan of having AI write for me, I will use it to analyze and help me improve my words.… This is a post for the Early Access MacSparky Labs Members.

Is AI Apple’s Siri Moonshot?

The Information has an article by Wayne Ma reporting Apple is spending “millions of dollars a day” on Artificial Intelligence initiatives. The article is pay-walled, but The Verge summarizes it nicely.

Apple has multiple teams working on different AI initiatives throughout the company, including Large Language Models (LLMs), image generation, and multi-modal AI, which can recognize and produce “images or video as well as text”.

The Information article reports Apple’s Ajax GPT was trained on more than 200 billion parameters and is more potent than GPT 3.5.

I have a few points on this.

First, this should be no surprise.

I’m sure folks will start writing about how Apple is now desperately playing catch-up. However, I’ve seen no evidence that Apple got caught with its pants down on AI. They’ve been working on Artificial Intelligence for years. Apple’s head of AI, John Giannandrea, came from Google, and he’s been with Apple for years. You’d think that people would know by now that just because Apple doesn’t talk about things doesn’t mean they are not working on things.

Second, this should dovetail into Siri and Apple Automation.

If I were driving at Apple, I’d make the Siri, Shortcuts and AI teams all share the same workspace in Apple Park. Thus far, AI has been smoke and mirrors for most people. If Apple can implement it in a way that directly impacts our lives, people will notice.

Shortcuts, with its Actions, gives Apple an easy way to pull this off. Example: You leave 20 minutes late for work. When you connect to CarPlay, Siri asks, “I see you are running late for work. Do you want me to text Tom?” That seems doable with an AI and Shortcuts. The trick would be for it to self-generate. It shouldn’t require me to already have an “I’m running late” shortcut. It should make one dynamically as needed. As reported by 9to5Mac, Apple wants to incorporate language models to generate automated tasks.

Similarly, this technology could result in a massive improvement to Siri if done right. Back in reality, however, Siri still fumbles simple requests routinely. There hasn’t been the kind of improvement that users (myself included) want. Could it be that all this behind-the-scenes AI research is Apple’s ultimate answer on improving Siri? I sure hope so.

Specific vs. General Artificial Intelligence

The most recent episode of the Ezra Klein podcast includes an interview with Google’s head of DeepMind, Demis Hassabis, whose AlphaFold project used artificial intelligence to predict the shapes of proteins, work that is essential for addressing numerous genetic diseases, drug development, and vaccines.

Before the AlphaFold project, human scientists, after decades of work, had solved the structures of around 150,000 proteins. Once AlphaFold got rolling, it solved 200 million protein shapes, nearly all proteins known, in about a year.

I enjoyed the interview because it focused on Artificial Intelligence to solve specific problems (like protein folds) instead of one all-knowing AI that can do anything. At some point in the future, a more generic AI will be useful, but for now, these smaller specific AI projects seem the best path. They can help us solve complex problems while at the same time being constrained to just those problems while we humans figure out the big-picture implications of artificial intelligence.

Addressing AI with Friends (MacSparky Labs)

You may have seen the news that the actors’ union is now on strike. This affects some of our friends and many more of my daughter’s friends since she is currently at UCLA in the Theater and Film School…

This is a post for MacSparky Labs Members only.

Is ChatGPT Really Artificial Intelligence?

Lately, I’ve been experimenting with some of these Large Language Model (LLM) artificial intelligence services, particularly ChatGPT. Several readers have taken issue with my categorization of ChatGPT as “artificial intelligence”. The reason, they argue, is that ChatGPT really is not an artificial intelligence system. It is a linguistic model looking at a massive amount of data and smashing words together without any understanding of what they actually mean. Technologically, it has more in common with the grammar checker in Microsoft Word than HAL from 2001: A Space Odyssey.

You can ask ChatGPT for the difference between apples and bananas, and it will give you a credible response, but under the covers, it has no idea what an apple or a banana actually is.

One reader wrote in to explain that her mother’s medical professional actually had the nerve to ask ChatGPT about medical dosages. ChatGPT’s understanding of what medicine does is about the same as its understanding of what a banana is: zilch.

While some may argue that ChatGPT is a form of artificial intelligence, I have to agree that there is a more compelling argument that it is not. Moreover, calling it artificial intelligence gives us barely evolved monkeys the impression that it actually is some sort of artificial intelligence that understands and can recommend medical dosages. That is bad.

So going forward, I will be referring to things like ChatGPT as an LLM, and not artificial intelligence. I would urge you to do the same.

(I want to give particular thanks to reader Lisa, who first made the case to me on this point.)

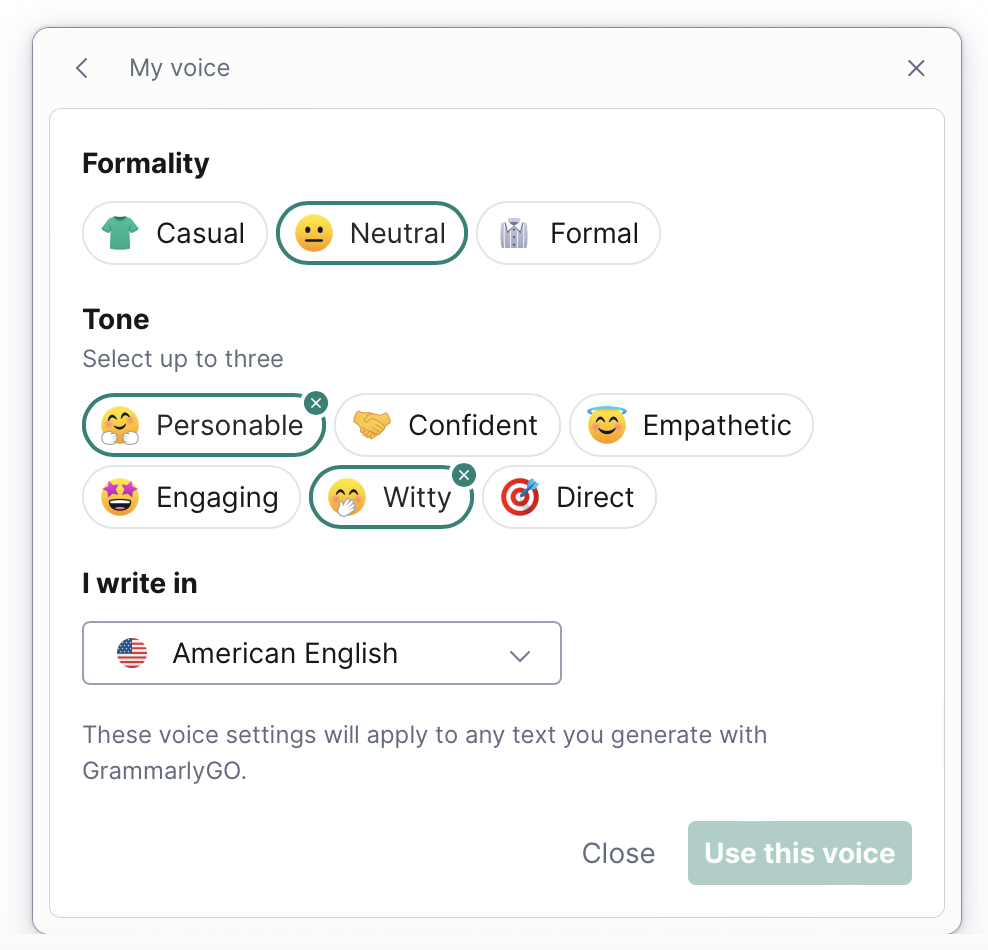

GrammarlyGO Brings in More AI

A recent update to Grammarly adds even more artificial intelligence: it’s called GrammarlyGO. I say “more AI” because Grammarly has always been an AI-based grammar-checking service. (There have never been humans there proofreading your work.)

GrammarlyGO brings it up a notch with the ability to adjust your voice and bring in other AI-based suggested checks. This feature lands for me as a valuable form of AI: not to write for me, but to make my own words better. Grammarly incorporating AI like this makes total sense.

Experimenting with Google Bard (MacSparky Labs)

I’ve been spending some time experimenting with Google Bard, Google’s competitor to ChatGPT. It got a few things right and a few things wrong, but this is an easy platform if you want to experiment with large language model artificial intelligence… This is a post for MacSparky Labs Level 3 (Early Access) and Level 2 (Backstage) Members only.

The Looming Threat of AI-Generated Voice

I read this post by John Gruber, and I couldn’t agree more about the shenanigans that will come from AI-generated deepfakes. The computers are so good at duplicating your voice at this point that a determined jackass could “produce” a tape of you saying anything. Conversely, an insolent jackass will deny an actual recording of him and claim it is a deepfake. Down is up. Up is down.

I don’t know that we’ll ever have “smoking gun” audio again. It’s just a question of time before that is true for video, too. The bad guys are certainly going to use this to further polarize us. Be warned.

Microsoft 365 Copilot

Last week Microsoft gave an impressive presentation demonstrating the incorporation of artificial intelligence into their productivity apps. You can have it summarize and analyze data in Excel, write better documents in Word, and even summarize email in Outlook.

Moreover, it had less of that wild west feel we are seeing in most of the artificial intelligence features added to existing apps. This was clearly thought out. It’s worth watching the presentation even if you don’t use Microsoft software.

I really think this is a step in the right direction. What I would ultimately like from artificial intelligence is for it to help me get my work done better and faster. So much of modern technology seems to get in the way of serious work, rather than assist it. In the Iron Man movies, Tony Stark always has Jarvis working in the background for him, handling little things so Tony can work on the big things. I want Jarvis.

Just think how much easier your life could be if you had a digital assistant that could do things for you like:

- Manage calendars and schedule appointments

- Send and respond to emails

- Set reminders and alarms

- Make reservations and appointments

Seeing these initial steps from Microsoft gives me hope that Jarvis may show up sooner than I thought.