It’s time for the latest Lab Report from the MacSparky Labs, covering this week’s Apple news and rumors. In this episode: It’s Glowtime for Apple (and they name a new CFO), more iPhone 16 rumors ahead of the September 9 event, and a Labs Video rebroadcast all about the Action Button.

… This is a post for MacSparky Labs Members. Care to join? Or perhaps you need to sign in?

Sparky’s New Notebook Wallet

For some time now I’ve been jumping between a minimal wallet that just holds a few cards and a bigger wallet that holds my Field Notes. With the PLOTTER mini 5, I feel a lot like Goldilocks.

Focused 211: The Focus MOB

On this episode of Focused, Mike and I consider the role of Margin, Ownership, and Boundaries in the pursuit of living a focused life.

The Rumored AirPods Max 2

Rumors continue to swirl around the idea of updated AirPods Max. 9to5Mac did a nice summary of the features anticipated in a new hardware version, including the H2 chip, Adaptive Audio, Conversation Awareness, and Personalized Volume. The current iteration of AirPods Max (now four years old) has been lagging behind some of the newer features in other versions of the AirPods.

I bought the original AirPods Max shortly after they launched, and I use them regularly. However, I rarely take them out of the studio. Sometimes I need really good audio as I edit screencasts and podcasts, and I am unable or unwilling to use speakers. In these cases, they are excellent. (They’re also really good for listening to music louder than I want my wife to know I am listening to music.)

I went back and looked at my original impressions of these headphones, and most of them still hold true. I never really found the weight to be a problem. I sometimes have them on for two or three hours at a time, and it’s just not an issue for me.

Another use case for me with the AirPods Max is a backup set of podcasting headphones. I’ve had several occasions where I used them as monitors while recording shows, and they work just fine.

I don’t think I’ll rush to upgrade my original AirPods Max when the new ones come out, but some of those new features are tempting. Regardless, I hope we see the long overdue update to AirPods Max at the iPhone event next month.

Apple’s September 9 “It’s Glowtime” Event

Apple announced this year’s iPhone event for Monday, September 9 at 10:00 a.m. Pacific.

A few things about it:

- Monday is unusual for an Apple Event. My guess: they want to stay clear of the US Presidential Debate set for September 10.

- Mark Gurman reports it’s another pre-taped event. I wonder if Apple will ever go back to live events. They spent a lot of money on those Steve Jobs Theater seats.

- For those of you in the MacSparky Labs, keep an eye on your email. I’ll set up a few events around the announcements.

The Chipolo Location Card

For a while now I’ve been looking for a good location tracker for my wallet. This just might be the one for me. Let me show it to you and see what you think.

… This is a post for the Early Access and Backstage MacSparky Labs Members. Care to join? Or perhaps you need to sign in?

Things Organized Neatly

Mac Power Users 759: The New Overcast, with Marco Arment

Marco Arment joins Stephen and me on this episode of Mac Power Users to discuss his podcast app for iOS, Overcast, which just received a major rewrite for its 10th anniversary. We talk about that project, how he thinks through user feedback, and Apple’s annual release cycle.

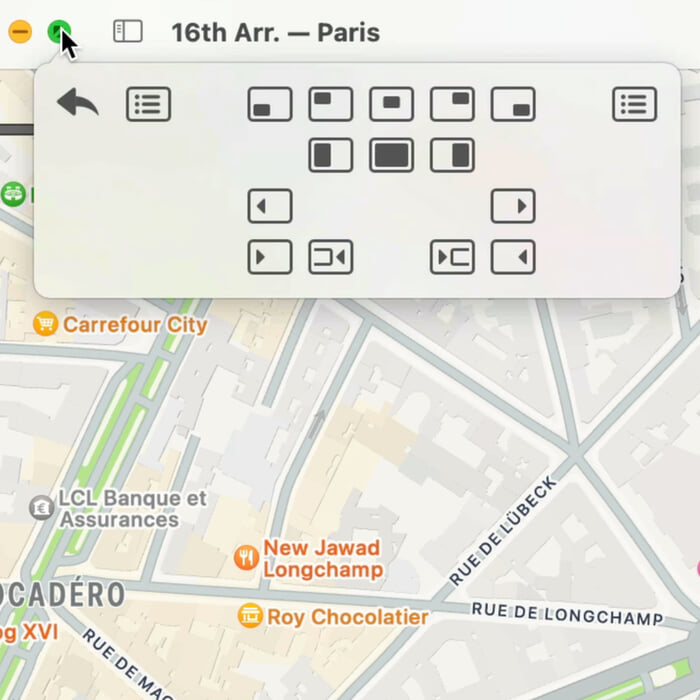

Moom 4

One of the best ways to remain productive on your Mac is through window management. There are many ways to do that, but for years now, my favorite has been Moom, which just got a nice update to version 4.

Moom has always been a favorite for quickly resizing and positioning windows, but the new version takes things to a new level. The hover-based pop-up palette is still there, but it’s become more powerful. With a simple mouse gesture, you can snap windows to predefined areas. Click, drag, and resize windows with pinpoint accuracy. It also lets you snap windows to edges and corners.

One of Moom 4’s standout features is the ability to save and restore window layouts. This is particularly useful for those of us working across multiple monitors or juggling different projects throughout the day. I’m a big fan of Mac setups, and this new version of Moom makes it so easy.

Moom also allows you to create custom commands, which can be triggered via hot keys. Imagine chaining a series of window adjustments to a single keystroke. You can also move, resize, and center windows without touching your mouse.

The upcoming Sequoia release has the best iteration of window management Apple has ever shipped. That may be enough for many folks, but Moom 4 really takes it to the next level.

Automators 162: Smart Home Feedback

In this episode of Automators, Rosemary and I respond to some listener feedback and share updates on smart home technology and solutions.