It’s time for the latest Lab Report from MacSparky, covering this week’s Apple news and updates… This is a post for MacSparky Labs Members only.

Contextual Email

I’ve been trying an experiment where I sort my email contextually. It’s working for me, and in this video I share the details… This is a post for Early Access and Backstage MacSparky Labs Members only.

Focused 188: Q4 Planning and the PKM Stack

Join Mike and me on this episode of Focused as we revisit the topic of toxic productivity, discuss choosing apps and tools that support your intentions, and share our plans for the last quarter of 2023.

This episode of Focused is sponsored by:

- Electric: Unbury yourself from IT tasks. Get a free pair of Beats Solo3 Wireless Headphones when you schedule a meeting.

- Zocdoc: Find the right doctor, right now with Zocdoc. Sign up for free.

- CleanMyMac X: Your Mac. As good as new. Get 5% off today.

- Indeed: Join more than three million businesses worldwide using Indeed to hire great talent fast.

Exploring Default Folder X 6

Default Folder X has long been my go-to app for upgrading the save and open dialog boxes on my Mac. With version 6, Default Folder X got several new tricks, and I demonstrate some of them in this video… This is a post for MacSparky Labs Members only.

Mac Power Users 713: Photography with Tyler Stalman

Tyler Stalman joins Mac Power Users to share the details on the iPhone 15 and iPhone 15 Pro camera systems. Tyler also shares some of his favorite tips for better iPhone photography and videography.

This episode of Mac Power Users is sponsored by:

- TextExpander: Get 20% off with this link and type more with less effort! Expand short abbreviations into longer bits of text, even fill-ins, with TextExpander.

- Electric: Unbury yourself from IT tasks. Get a free pair of Beats Solo3 Wireless Headphones when you schedule a meeting.

- Zocdoc: Find the right doctor, right now with Zocdoc. Sign up for free.

- Factor: Healthy, fully-prepared food delivered to your door.

Automators 138: Shortcuts Utility Apps

On this episode of Automators, Rosemary and I cover some of our favorite apps that add features to Shortcuts.

This episode of Automators is sponsored by:

- ExpressVPN: High-Speed, Secure & Anonymous VPN Service. Get an extra three months free.

- TextExpander: Your Shortcut to Efficient, Consistent Communication. Get 20% off.

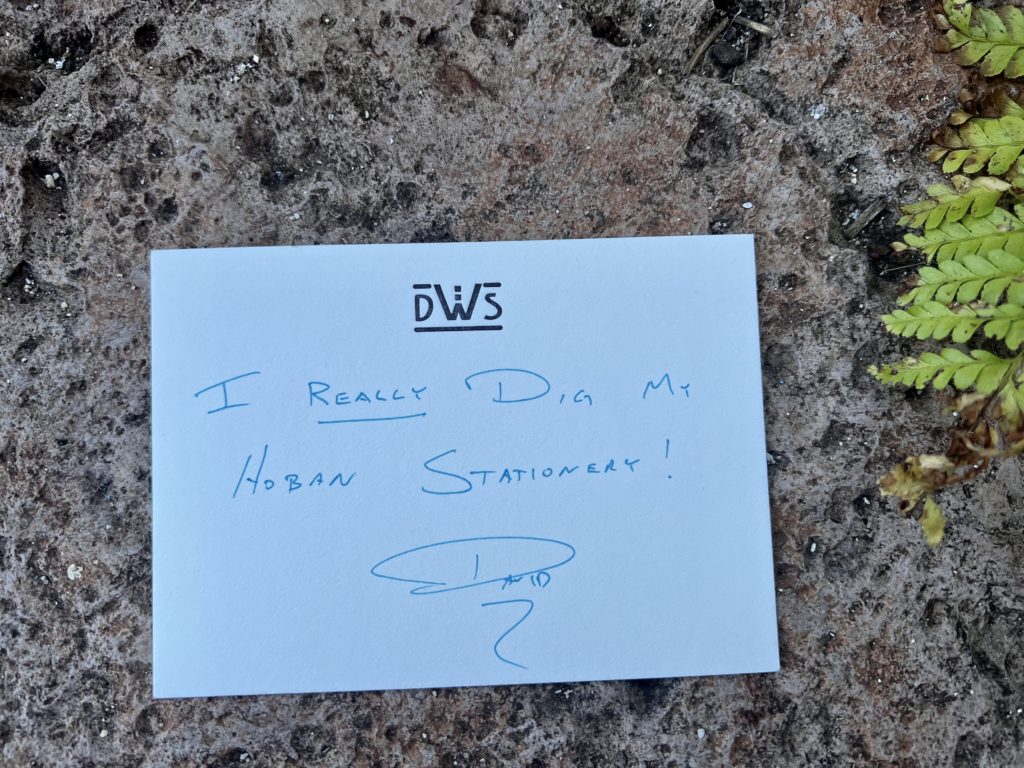

Hoban Cards and Stationery (Sponsor)

This week, MacSparky is sponsored by Hoban Cards, where they use a 1902 letterpress machine to make cards that your colleagues, clients, and customers will never forget. I sure love mine.

Evan and the gang at Hoban Cards are masters at the craft of designing and making letterpress calling cards and stationery. They have some beautiful templates to choose from, or you can roll your own.

I love handing out letterpress cards. It is always a conversation starter. Hoban Cards is where I go to buy them, and it is where you should too. Throw out those ugly, conventional, mass-produced, soulless business cards and reach out to Hoban Cards.

If you’re set on calling cards, I also recommend going to Hoban for your stationery. I bought stationery from them years ago, and I love sending it to friends and family. In a world full of text messages and email, personal stationery sends a different message altogether.

Best of all, use ‘MacSparky’ to get $10 off any order. Get yours today.

The Lab Report – 6 October 2023

It’s time for the latest Lab Report from MacSparky, covering this week’s Apple news and updates… This is a post for MacSparky Labs Members only.

MacSparky Labs Deep Dive • September 2023

For this Deep Dive meeting, we shared our first impressions of iOS 17 as well as the new hardware Apple announced at its September 12 event…

This is a post for MacSparky Labs Level 3 (Early Access) Members only.

MurmurType for Simple Dictation

I’ve heard from some Labs members who want to do more with dictation on their Mac but are getting hung up on the fact that the words appear on the screen as they speak…

This is a post for MacSparky Labs Level 3 (Early Access) and Level 2 (Backstage) Members only.